Key Points

- Development of AI could lead to autonomous weapons capable of killing "at an industrial scale", a new study says.

- The study’s authors also warned of health impacts from job losses as more types of work are automated.

- Powerful AI-driven surveillance systems can be used to oppress populations, the authors also caution.

The development of artificial intelligence (AI) needs to be paused because of the risk it poses to public health and safety, according to a group of doctors and public health experts.

Artificial intelligence could facilitate the development of autonomous weapons able to kill "at an industrial scale" without human supervision, according to a study published in the open access journal BMJ Global Health.

AI can also be used to build powerful surveillance systems, to spread misinformation and social division, to manipulate consumer choices and even to enable government oppression, study authors David McCoy and Frederik Federspiel said.

"We really need an international treaty and a global approach to regulation," David McCoy, a professor at United Nations University Malaysia and one of the study's authors, told SBS News.

"The United Nations and governments are really crucial to any form of effective regulation to prevent the misuse of artificial intelligence," he said.

More than 75 countries are currently using AI surveillance.

The study's authors also warned of the health impacts of job losses as AI facilitates the automation of more types of work, given the association of unemployment with "adverse health outcomes and behaviour".

Creators of AI have touted its transformative potential, including in medicine and public health, but the group of doctors are joining calls for progress to be halted until regulation is able to keep up with its advances.

How does AI enable weapons development?

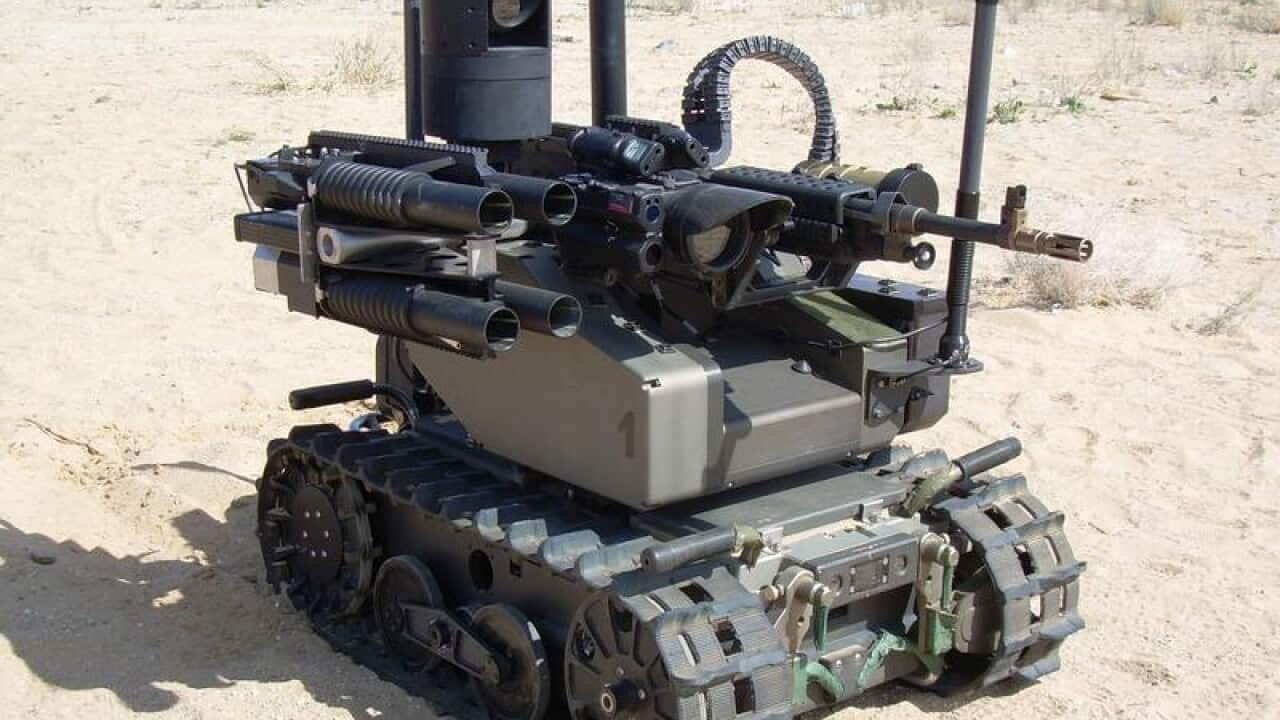

The study's authors warn that AI enables the development of Lethal Autonomous Weapon Systems (LAWS) capable of locating, selecting, and engaging human targets, all without human supervision.

"In theory, you could produce a kind of cargo van with hundreds of thousands of small autonomous weapons attached to drones that are capable of targeting in a more discriminate fashion," Professor McCoy said.

The technology for this kind of highly lethal weapon is relatively cheap, he added.

"We don't know exactly who has this kind of technology or who is developing this technology.

"But I think it's fair to say that this technology is being developed by governments across the world," he said.

In 2021, the United Nations held talks on autonomous weapons, which stopped short of launching negotiations on an international treaty to govern their use, and instead agreed merely to continue discussions.

How can AI facilitate the misuse of personal data?

The study authors said AI has the ability to rapidly clean, organise, and analyse massive data sets consisting of personal data, including images.

They pointed to the role of AI in subverting democracy in the 2013 and 2017 Kenyan elections, the 2016 US presidential election, and the 2017 French presidential election.

“When combined with the rapidly improving ability to distort or misrepresent reality with deep fakes, AI-driven information systems may further undermine democracy by causing a general breakdown in trust or by driving social division and conflict, with ensuing public health impacts,” the authors said.

AI-driven surveillance may also be used by governments to oppress people more directly, as in the case of China’s Social Credit System, they argued.

China’s Social Credit System combines facial recognition software and analysis of people’s financial transactions, movements, police records and social relationships, essentially allowing Beijing to sculpt its population’s behaviour using a set of rewards and punishments.

Last week a pioneering computer scientist widely known as the "godfather of AI" said he had quit Google to speak freely about the technology's dangers, after realising computers could become smarter than people far sooner than he and other experts had expected.

In an interview with the New York Times, Geoffrey Hinton said he was worried about AI's capacity to create convincing false images and texts, creating a world where people will "not be able to know what is true anymore."

"It is hard to see how you can prevent the bad actors from using it for bad things," he said.