Key Points

- Geoffrey Hinton says he is worried about AI's capacity to create convincing false images and texts.

- He said it was "hard to see how you can prevent the bad actors from using it for bad things."

- CEOs of Google, Microsoft, OpenAI and Anthropic will meet with US Vice President Kamala Harris on Thursday.

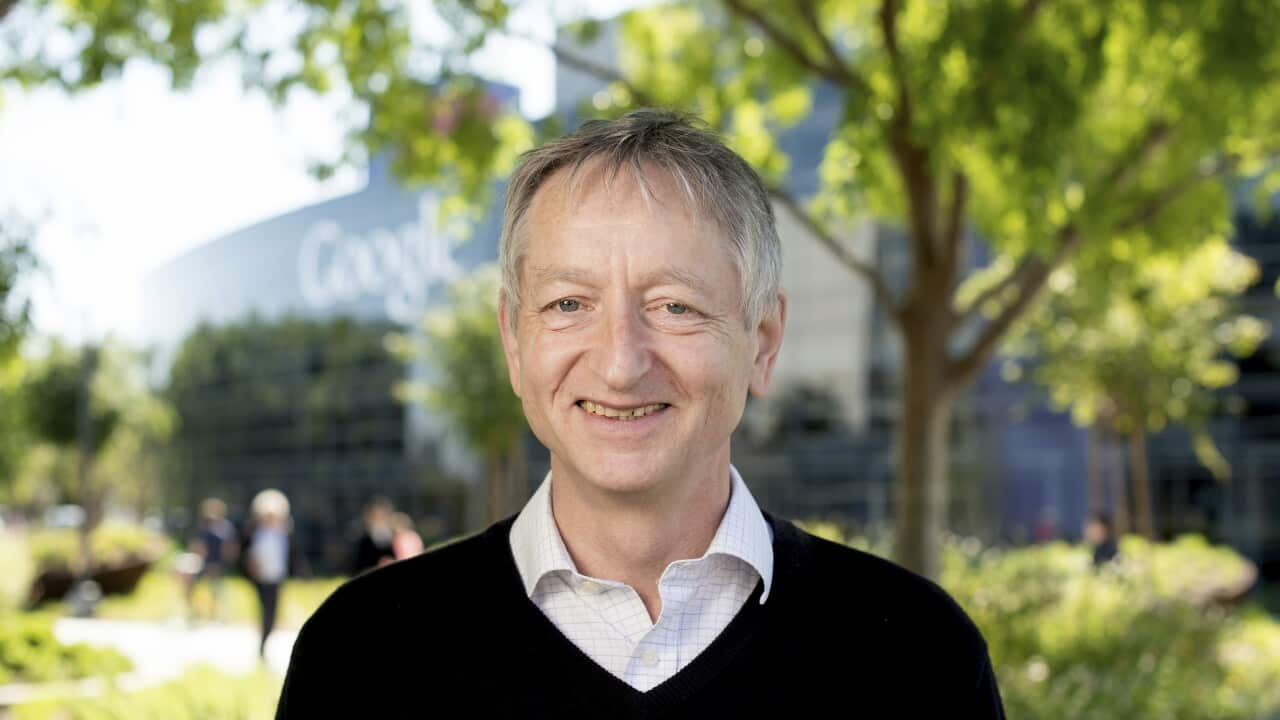

A pioneer of artificial intelligence said he quit Google to speak freely about the technology's dangers, after realising computers could become smarter than people far sooner than he and other experts had expected.

"I left so that I could talk about the dangers of AI without considering how this impacts Google," Geoffrey Hinton wrote on Twitter.

In an interview with the New York Times, Mr Hinton said he was worried about AI's capacity to create convincing false images and texts, leading to a world where people will "not be able to know what is true anymore."

"It is hard to see how you can prevent the bad actors from using it for bad things," he said.

There are concerns the technology could quickly displace workers, and become a greater danger as it learns new behaviours.

“The idea that this stuff could actually get smarter than people — a few people believed that,” he told the New York Times.

“But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

In his tweet, Hinton said Google itself had "acted very responsibly" and denied that he had quit so that he could criticise his former employer.

Google did not immediately reply to a request for comment from Reuters.

The Times quoted Google’s chief scientist, Jeff Dean, as saying in a statement: “We remain committed to a responsible approach to AI. We’re continually learning to understand emerging risks while also innovating boldly.”

Is it time to regulate AI?

Since Microsoft-backed startup OpenAI released ChatGPT in November, the growing number of "generative AI" applications that can create text or images has provoked concern over the future regulation of the technology.

“That so many experts are speaking up about their concerns regarding the safety of AI, with some computer scientists going as far as regretting some of their work, should alarm policymakers,” said Carissa Veliz, an associate professor in philosophy at the University of Oxford's Institute for Ethics in AI.

"The time to regulate AI is now," she said.

White House calls in tech firms to talk AI risks

According to a White House official, the chief executives of Google, Microsoft, OpenAI and Anthropic will meet with US Vice President Kamala Harris and top administration officials to discuss key artificial intelligence issues on Thursday.

The invitation to the CEOs, seen by Reuters, noted President Joe Biden's "expectation that companies like yours must make sure their products are safe before making them available to the public."

Concerns about fast-growing AI technology include privacy violations, bias and the risk that it could spread scams and misinformation.

In April, Mr Biden said it remains to be seen whether AI is dangerous but underscored that technology companies had a responsibility to ensure their products were safe.

He said social media had already illustrated the harm that powerful technologies can do without the right safeguards.

The administration has also been seeking public comments on proposed accountability measures for AI systems, as concerns grow about the technology's impact on national security and education.

On Monday, deputies from the White House Domestic Policy Council and White House Office of Science and Technology Policy wrote in a blog post about how the technology could pose a serious risk to workers.

The companies did not immediately respond to a request for comment.

ChatGPT, an AI program that can quickly write answers to a wide range of queries, has attracted particular attention from US lawmakers as it has become the fastest-growing consumer application in history, with more than 100 million monthly active users.

"I think we should be cautious with AI, and I think there should be some government oversight because it is a danger to the public," Tesla Chief Executive Elon Musk said last month in a television interview.