Human Rights Watch is calling for an international treaty banning killer robots, saying it is the only way to deal with the “serious challenges raised by fully autonomous weapons.”

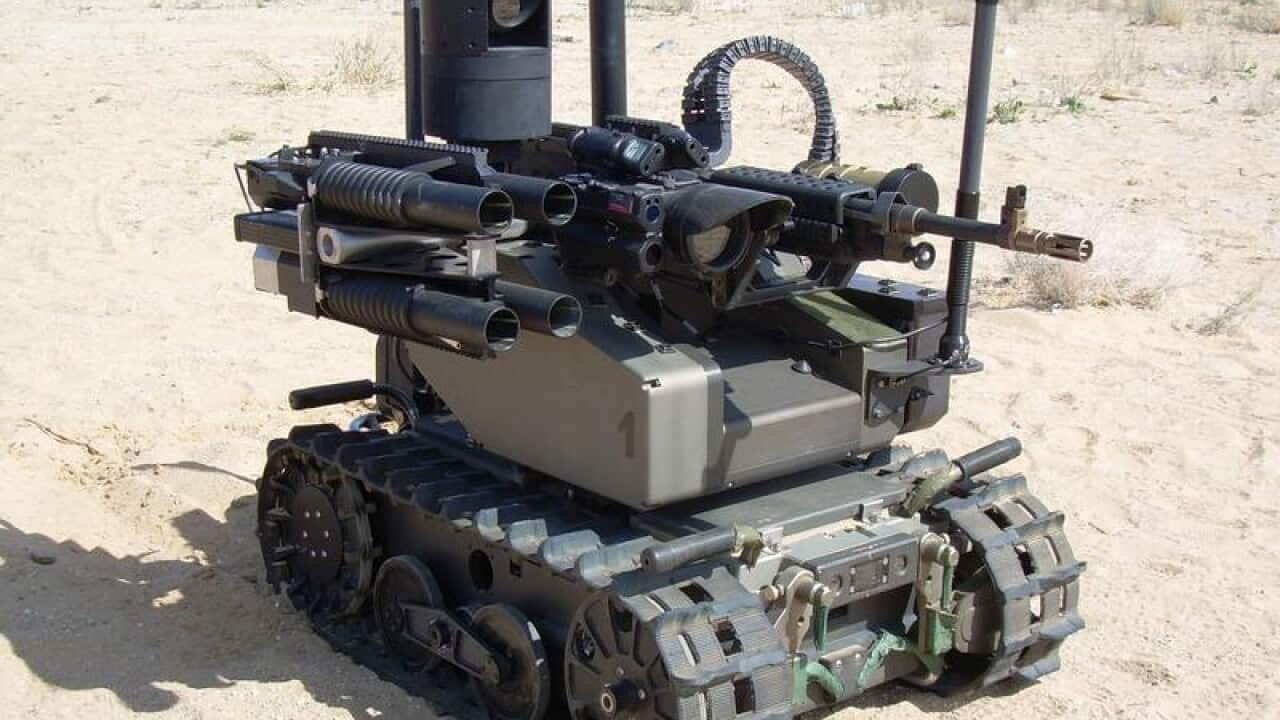

‘Killer robots’ is the term used for weapons systems that select and engage targets without meaningful human control. Human rights bodies, including Human Rights Watch, say they are “unacceptable and need to be prevented.”

There are 30 countries calling for a ban on killer robots, according to a report released today. Australia is not one of them.

Countries calling for a ban:

Algeria, Argentina, Austria, Bolivia, Brazil, Chile, China (use only), Colombia, Costa Rica, Cuba, Djibouti, Ecuador, El Salvador, Egypt, Ghana, Guatemala, the Holy See, Iraq, Jordan, Mexico, Morocco, Namibia, Nicaragua, Pakistan, Panama, Peru, the State of Palestine, Uganda, Venezuela, and Zimbabwe.

The report analyses the positions of 97 countries on killer robots. According to the report, Australia has participated in global talks on the use of automated weapons but has stopped short of supporting a worldwide ban. Australia is developing and testing various autonomous weapons systems, while the defence force has a policy that decisions to kill will never be made solely by machines. However, Australia has so far not supported a new international treaty to address concerns over such weapons.

Protesters in Berlin call for a ban on the development and use of fully autonomous lethal weapons. Source: RTV

In March 2018, then foreign minister Julie Bishop said it is premature to support a ban on autonomous weapons.

Almost 100 nations have participated in the eight Convention on Conventional Weapons (CCW) meetings on lethal autonomous weapons systems from 2014 to 2019.

Austria, Brazil and Chile have proposed negotiations on a legally binding agreement to ensure humans have control over weapons.

Australia has participated in every CCW meeting on killer robots from 2014 to 2019. The government has expressed interest in discussing applicable international humanitarian law, definitions, military utility, and humanitarian aspects of killer robots.

In March last year, Australia told a CCW meeting that “autonomous technology has distinct benefits for the promotion of humanitarian outcomes and avoidance of civilian casualties.”

Last year, two Australian universities began a joint study on how to make autonomous weapons, such as future armed drones, behave ethically in warfare.

The $9 million project, undertaken by the University of NSW Canberra and the University of Queensland, is funded by the Defence Department.

Professor Toby Walsh is a leading artificial intelligence scientist at the University of NSW and has spoken at the UN on the issue, calling for a ban.

Despite a branch of his own university leading a project on killer robots, in 2017 he wrote an open letter detailing the potential threats, which was signed by 20,000 others including Stephen Hawking, Elon Musk and Noam Chomsky.

“Sadly, Australia remains disappointing on this issue which is a pity because when it comes to arms control, we’ve often led the way,” Professor Walsh told SBS Dateline.

He said it is unhelpful that Australia's position suggests it is premature to ban these weapons and they might even have humanitarian benefits.

“We can influence diplomacy, way above than the size otherwise suggests, on this topic we are dragging our heels.”

Decisions at the CCW are made by consensus, which means just one country can block an agreement sought by a majority.

The Human Rights Watch report singled out Russia and the United States as two military powers that have blocked progress toward regulations while investing heavily in military applications of artificial intelligence and developing air, land, and sea-based autonomous weapons systems.

"Removing human control from the use of force is now widely regarded as a grave threat to humanity that, like climate change, deserves urgent multilateral action," said Mary Wareham, arms division advocacy director at Human Rights Watch and coordinator of the Campaign to Stop Killer Robots.

“An international ban treaty is the only effective way to deal with the serious challenges raised by fully autonomous weapons.”