AI deepfakes can be used to manipulate images of politicians to spread disinformation. They can be used, and have been used, to turn pictures of celebrities such as actor Scarlett Johansson into sexually explicit videos. But what if it wasn't Scarlett Johansson? What if it was you?

Sensity AI, a research company tracking deepfake videos, found in a 2019 study that 96 per cent of them were pornographic.

The topic of deepfake pornography returned to the public conversation last week when popular US Twitch streamer, Brandon "Atrioc" Ewing, was caught watching AI-generated material of several female streamers.

Atrioc issued a tearful apology video alongside his wife as he admitted to buying videos of two streamers who had previously considered him a friend.

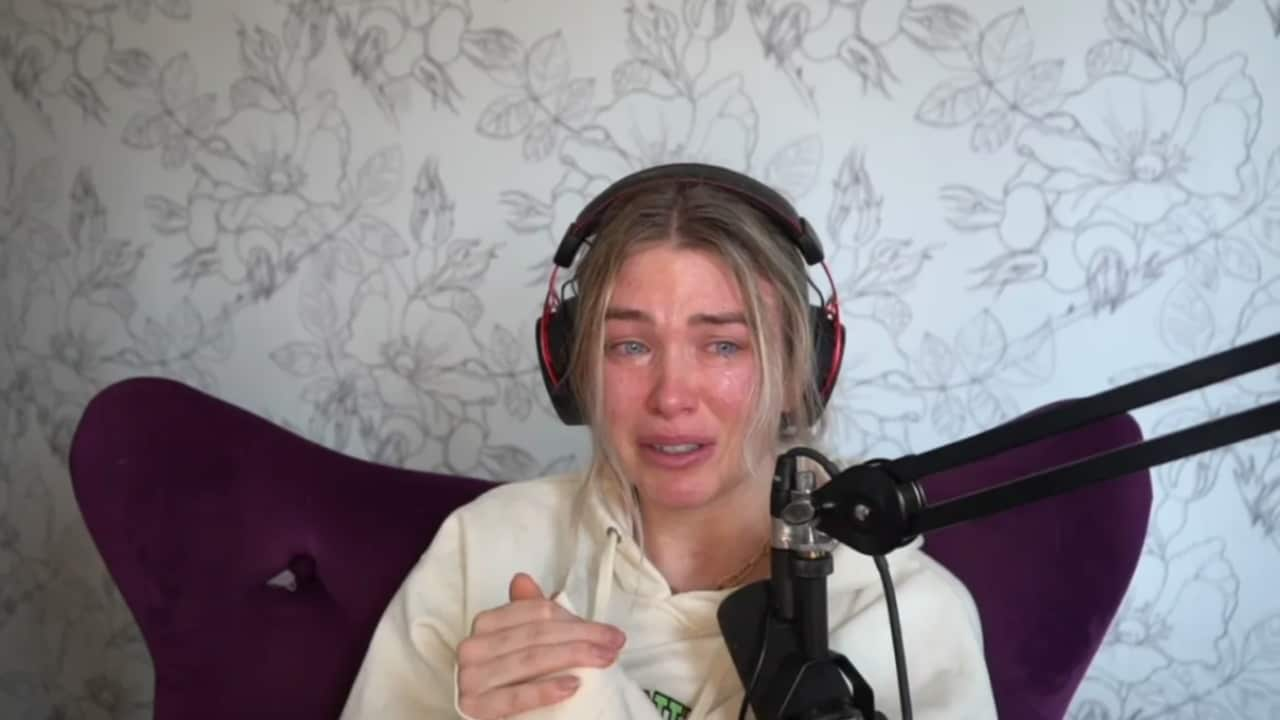

In the aftermath, popular Twitch streamer QTCinderella discovered she too had been doctored by the AI and the fake material was being sold on the website. The 28-year-old, whose real name is Blaire, was visibly distraught in her response.

Twitch streamer Atrioc in his apology video after being caught viewing deepfake pornographic material.

"F--k the f--king internet. F--k the constant exploitation and objectification of women, it's exhausting, it's exhausting. F--k Atrioc for showing it to thousands of people.

"F--k the people DMing me pictures of myself from that website... This is what it looks like, this is what the pain looks like.

"If you are able to look at women who are not selling themselves or benefiting... if you are able to look at that you are the problem. You see women as an object."

Another victim of the incident, Pokimane, who has over seven million followers, called on them in a tweet to "stop sexualizing people without their consent. That's it, that's the tweet."

Popular female gamer Pokimane asked for viewers to stop sexualising her. Credit: Pokimane

"I sorta feel bad for him," "fair enough, you gotta do what you gotta do," and "what's the website? asking for a friend," are just some of the many comments siding with Atrioc.

Consent activist Chanel Contos, who led the Teach Us Consent campaign in Australia, which resulted in a new national consent curriculum, said the incident and the reactions from some viewers have been "deeply disturbing".

"Whilst we do need sturdy rules, regulations and laws regarding this, the only way to really prevent people from taking advantage of image-based abuse is by ensuring that we are embedding concepts of consent into people, especially younger generations who are going to be more inclined to use this sort of AI technology," Ms Contos told The Feed.

"AI technology does make it so realistic, it does make it that extra bit violating. Moving pictures can be a lot more jarring than a still image that's been clearly photoshopped."

What is a deepfake?

Deepfake media (the word is a combination of "deep learning" and "fake") overlays one person's image or video onto existing footage. It uses machine learning and AI to manipulate visuals and even audio, so the result can look and sound like someone else.

While deepfakes can enhance the entertainment and gaming industries, they have also attracted concern for their potential to create child sexual abuse material, celebrity pornographic videos, revenge porn, fake news, financial fraud, and fake pornographic material of non-consenting people.

In March 2022, a deepfake of Ukrainian President Volodymyr Zelenskyy circulated on social media and was planted on a Ukrainian news website by hackers before it was debunked and removed.

The manipulated video appeared to show Mr Zelenskyy telling the Ukrainian army to lay down its arms and surrender the fight against Russia. Many believed the video was part of Russia's information warfare.

In a wide-ranging interview with Forbes magazine last week, the boss of OpenAI said he has "great concern" about AI-generated revenge porn.

"I definitely have been watching with great concern the revenge porn generation that’s been happening with the open-source image generators," he said.

"I think that's causing huge and predictable harm."

What do Australia's laws say about deepfake pornography?

In a statement to The Feed, eSafety Commissioner Julie Inman Grant said: "Recently we have begun to see deepfake technology weaponised to create fake news, false pornographic videos and malicious hoaxes, mostly targeting well-known people such as politicians and celebrities.

"As this technology becomes more accessible, we expect everyday Australian citizens will also be affected.

"Posting nude or sexual deepfakes would be a form of image-based abuse, which is sharing intimate images of someone online without their consent."

Image-based abuse is a breach of the Online Safety Act 2021, the legislation administered by eSafety. Under the Act, perpetrators are issued with a fine, but laws in other jurisdictions can impose jail time.

Any Australian whose images or videos have been altered to appear intimate and are published online without consent can contact eSafety for help to have them removed.

"Innovations to help identify, detect and confirm deepfakes are advancing and technology companies have a responsibility to incorporate these into their platforms and services," Ms Inman Grant added in the statement.

Andrew Hii, a technology partner at the law firm Gilbert + Tobin, said federal laws protect those in Australia from this kind of abuse, but questions about how well those laws are enforced remain.

"I think there is a question as to whether regulators are doing enough to enforce those laws and make it easy enough for people who believe that they're victims of these things to take action to stop this," he said.