TRANSCRIPT

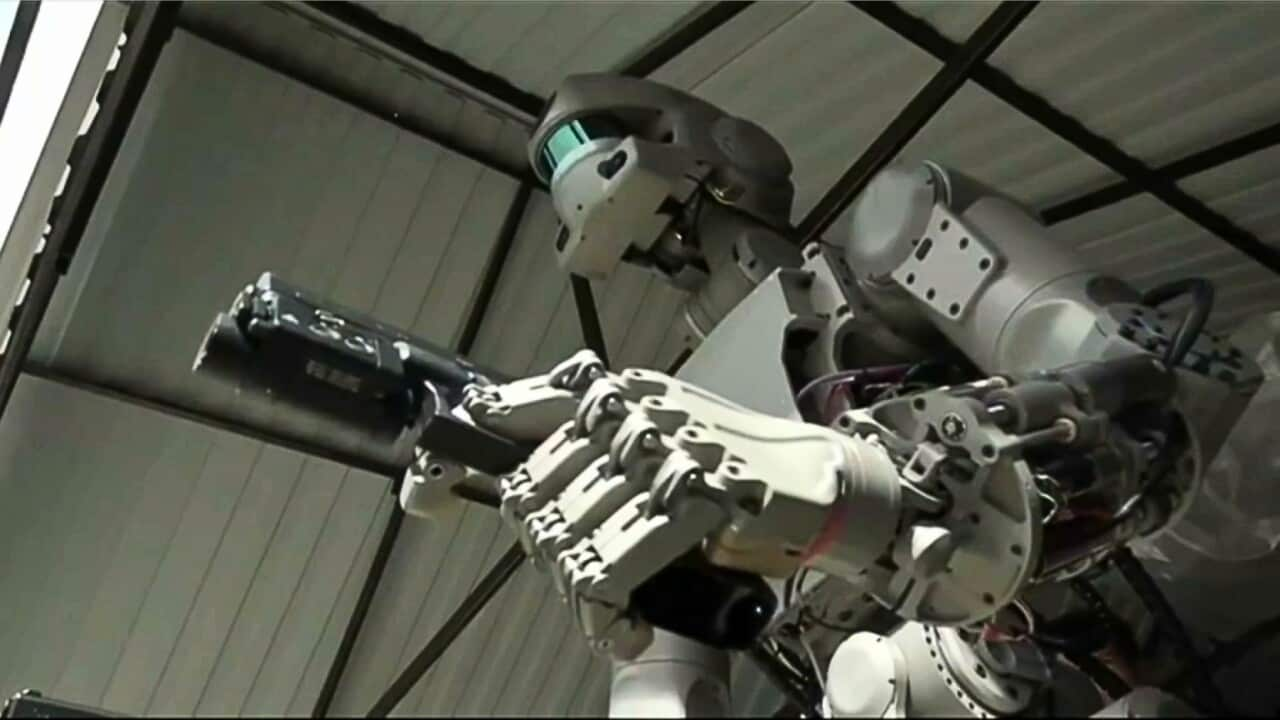

The way we wage war is changing, with experts warning of a new arms race to militarise artificial intelligence.

This is Professor Toby Walsh, chief scientist at the Artificial Intelligence Institute at the University of New South Wales.

“You're familiar with seeing drones flying across the skies of Afghanistan or Iraq. Those are still semi-autonomous, there's still a human in the loop, there's fingers on the trigger. But increasingly those drones are being flown by computers, and increasingly it's computers that are making that final decision, who to target and who to kill on the ground.”

According to the United Nations, autonomous weapons systems 'locate, select and engage human targets without human supervision'.

These systems are also known as 'killer robots', and can rely on data such as weight, age and race to identify and attack.

This is Doctor Olga Boichak, sociologist and lecturer of digital cultures at the University of Sydney.

“AI is being used to kill people based on their data-fied footprint. We know that AI is being used by many militaries around the world to identify subjects that are a potential threat. It's called death by metadata. And that is because someone's data-fied footprint is resembling that of a known terrorist.”

A United Nations report suggests the first autonomous drone strike occurred in 2020, when Turkish-made drones were deployed amid the military conflict in Libya.

Since then, this type of weaponry has played a role in other conflicts, including the war in Ukraine.

According to the Australian Institute of International Affairs, Russia, China, Israel, India, the United States, the United Kingdom, and Australia are among the countries leading the development of AI in military applications.

This is Professor Walsh.

“Israel is a nation with a significant capability to develop arms, and the Gaza border itself is one of the most surveilled borders, with lots of that surveillance done with autonomous AI-powered systems.”

Handing over these life-or-death decisions to machines poses a moral dilemma. The developments are prompting experts to call for the regulation of lethal autonomous weapons under the UN's Convention on Certain Conventional Weapons, and for them to be prohibited under the Geneva Convention in the same way chemical and biological weapons were in 1925.

This is Daniela Gavshon, the Australia director for Human Rights Watch.

“Weapons that operate without any meaningful human control don't have any human qualities of judgement and compassion, which are such integral parts of making an analysis of what's a lawful target, and then whether or not to go ahead or pull back at different moments.”

Experts like Professor Walsh fear the reality of AI autonomous weaponry has not fully been realised, and are urging action before it is too late.

“An example, one that probably terrifies me the most, is a Russian autonomous underwater submarine. It's called Poseidon, it's the size of a bus, it's nuclear powered so it could travel almost unlimited distances at very high speed, and it's believed it will carry a dirty nuclear bomb. This could drive into Sydney Harbour and autonomously an algorithm decides to start a nuclear war.”